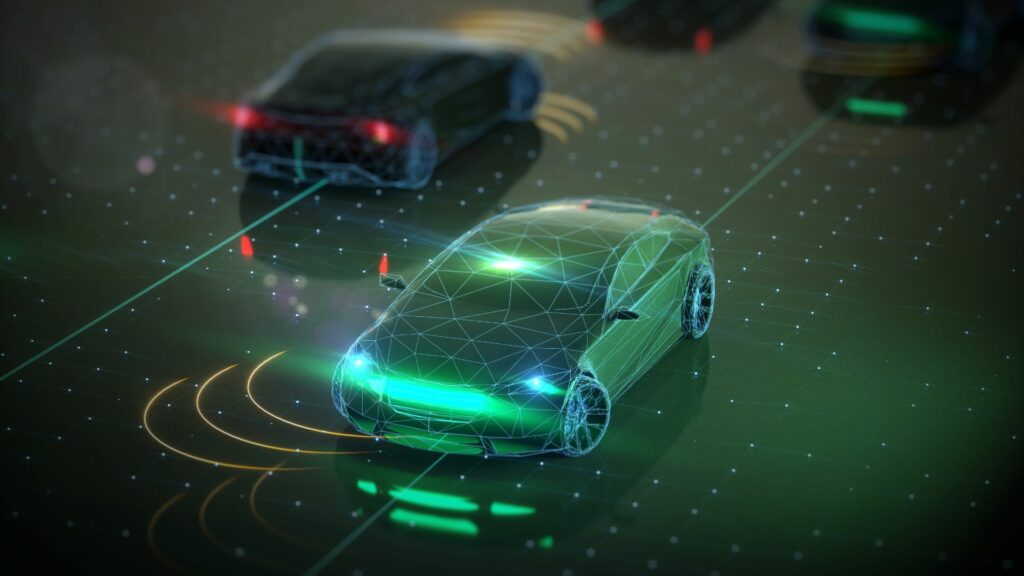

Multisensor fusion is a critical component of the environmental perception systems in driverless vehicles, improving safety and decision-making. By integrating data from sensors such as cameras, LiDAR, radar, and ultrasonic devices, these systems build a more comprehensive and accurate view of the environment, which improves global positioning accuracy and overall system performance across different scenarios.

What are the frequently used sensing methods?

The frequently used sensing methods in environmental perception systems for autonomous vehicles include:

- Cameras: Provide rich visual information, including color and texture, essential for object recognition and classification.

- LiDAR (Light Detection and Ranging): Offers precise 3D mapping of the environment, crucial for detecting obstacles and measuring distances.

- Radar: Effective in measuring the speed and position of objects, especially in adverse weather conditions.

- Ultrasonic Sensors: Typically used for short-range detection, aiding in parking and low-speed maneuvers.

Each of these sensors has its strengths and limitations. For instance, while cameras excel in capturing detailed visual information, their performance can degrade in low-light conditions.

LiDAR provides accurate distance measurements but can be expensive, and its performance can degrade in adverse weather such as heavy rain, fog, or snow.

Radar systems are robust across weather conditions but offer lower spatial resolution, so they capture fewer fine details of the environment. Ultrasonic sensors are limited to short-range applications.

By fusing data from these diverse sensors, driverless vehicles can mitigate individual sensor limitations, leading to more reliable and accurate environmental perception. This multisensor approach enhances the vehicle’s ability to detect and respond to dynamic elements in its environment, thereby improving overall safety and operational efficiency.
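As a minimal sketch of how fusion can offset individual sensor weaknesses, the Python snippet below combines two independent distance readings of the same object using inverse-variance weighting. This is one common textbook technique, not necessarily what any particular vehicle uses. The `RangeEstimate` type, the `fuse_inverse_variance` helper, and the noise variances are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class RangeEstimate:
    distance_m: float  # measured distance to the object, in metres
    variance: float    # assumed measurement noise variance, in m^2


def fuse_inverse_variance(estimates):
    """Combine independent estimates of one quantity, weighting each by 1/variance."""
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / e.variance for e in estimates]
    total_weight = sum(weights)
    fused_distance = sum(w * e.distance_m for w, e in zip(weights, estimates)) / total_weight
    # The fused variance is smaller than that of any single input.
    return RangeEstimate(fused_distance, 1.0 / total_weight)


# Illustrative (made-up) readings: a low-noise LiDAR range and a noisier radar range.
lidar = RangeEstimate(distance_m=20.0, variance=0.04)
radar = RangeEstimate(distance_m=21.0, variance=0.16)
fused = fuse_inverse_variance([lidar, radar])
print(f"fused distance: {fused.distance_m:.2f} m, variance: {fused.variance:.3f} m^2")
```

The fused estimate sits closer to the lower-noise reading, and its variance is lower than either input's, which is the sense in which combining sensors yields a more reliable result. The sketch assumes the two measurements are independent and refer to the same object at the same instant.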

However, implementing multisensor fusion presents challenges, including:

- Data Synchronization: Aligning data streams from sensors operating at different frequencies and latencies.

- Calibration: Ensuring accurate spatial and temporal alignment between sensors to maintain data consistency.

- Data Processing: Managing and processing large volumes of data in real time to support immediate decision-making.
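The synchronization challenge can be illustrated with a small sketch. Assuming each sensor stamps its samples against a shared clock, one simple approach is to pair every sample from a slower sensor with the nearest-in-time sample from a faster one, and to reject pairs that are too far apart. The `match_nearest` function, the sample rates, and the 20 ms tolerance are hypothetical choices for illustration; production systems often rely on hardware triggering or interpolation instead.

```python
from bisect import bisect_left


def match_nearest(reference_ts, other_ts, max_gap_s):
    """For each reference timestamp, return the index of the nearest timestamp
    in other_ts, or None if the nearest one is more than max_gap_s away.

    Both lists must be sorted in ascending order, in seconds.
    """
    matches = []
    for t in reference_ts:
        i = bisect_left(other_ts, t)
        # Only the neighbours on either side of the insertion point can be nearest.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(other_ts)]
        best = min(candidates, key=lambda j: abs(other_ts[j] - t), default=None)
        if best is not None and abs(other_ts[best] - t) <= max_gap_s:
            matches.append(best)
        else:
            matches.append(None)
    return matches


# Illustrative timestamps: a camera at roughly 30 Hz and a LiDAR at 10 Hz.
camera_ts = [0.000, 0.033, 0.067, 0.100]
lidar_ts = [0.000, 0.100, 0.200]
pairs = match_nearest(lidar_ts, camera_ts, max_gap_s=0.02)
print(pairs)
```

Here the third LiDAR sweep has no camera frame within the tolerance, so it is left unpaired rather than fused with stale data. How to handle such gaps (drop, wait, or extrapolate) is a design decision that depends on the latency budget.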

Addressing these challenges is crucial for the advancement of autonomous driving technologies. Ongoing research focuses on developing more efficient fusion algorithms, improving sensor technologies, and enhancing system robustness to ensure safe and reliable driverless vehicle operations.

In summary, multisensor fusion is indispensable for the environmental perception systems of driverless vehicles, providing a holistic and accurate understanding of the driving environment. Overcoming the associated challenges will pave the way for safer and more efficient autonomous transportation solutions.